Price Scraping: Definition, Use Cases, Challenges & Solutions

Online retailers and eCommerce professionals in general are required every day to understand more technological nuances.

Some say this is the cost of technological advancement and the effort to automate as many tasks as possible. Automation does bring numerous benefits to eCommerce professionals—increased productivity being probably the most important one.

This is where price scraping comes in. It greatly increases the efficiency of performing competitive analysis. Manual price tracking tasks that used to take hours or days, now take minutes.

Keep reading to learn what price scraping is, how it works, what are some of the most common challenges related to it and how to overcome them.

What is price scraping?

Price scraping is a process of extracting pricing data from a website using specialized bots and/or crawlers.

Most commonly, it’s used by eCommerce businesses and online retailers to keep track of the competition. More precisely, it is used to note down price fluctuations for certain products, categories, or brands.

This enables the users of a price scraping tool to decipher competitors’ pricing strategies and adapt their own.

In other words, price scraping is the crucial step towards automating the competitor price monitoring process.

A (brief) tech background of price scraping

Price scraping is a subset of web scraping. As such, it shares many common characteristics and required steps to perform.

Simply put, web scraping is a way of getting data from websites, most commonly for the purposes of research, competitive analysis, and even training various AI models.

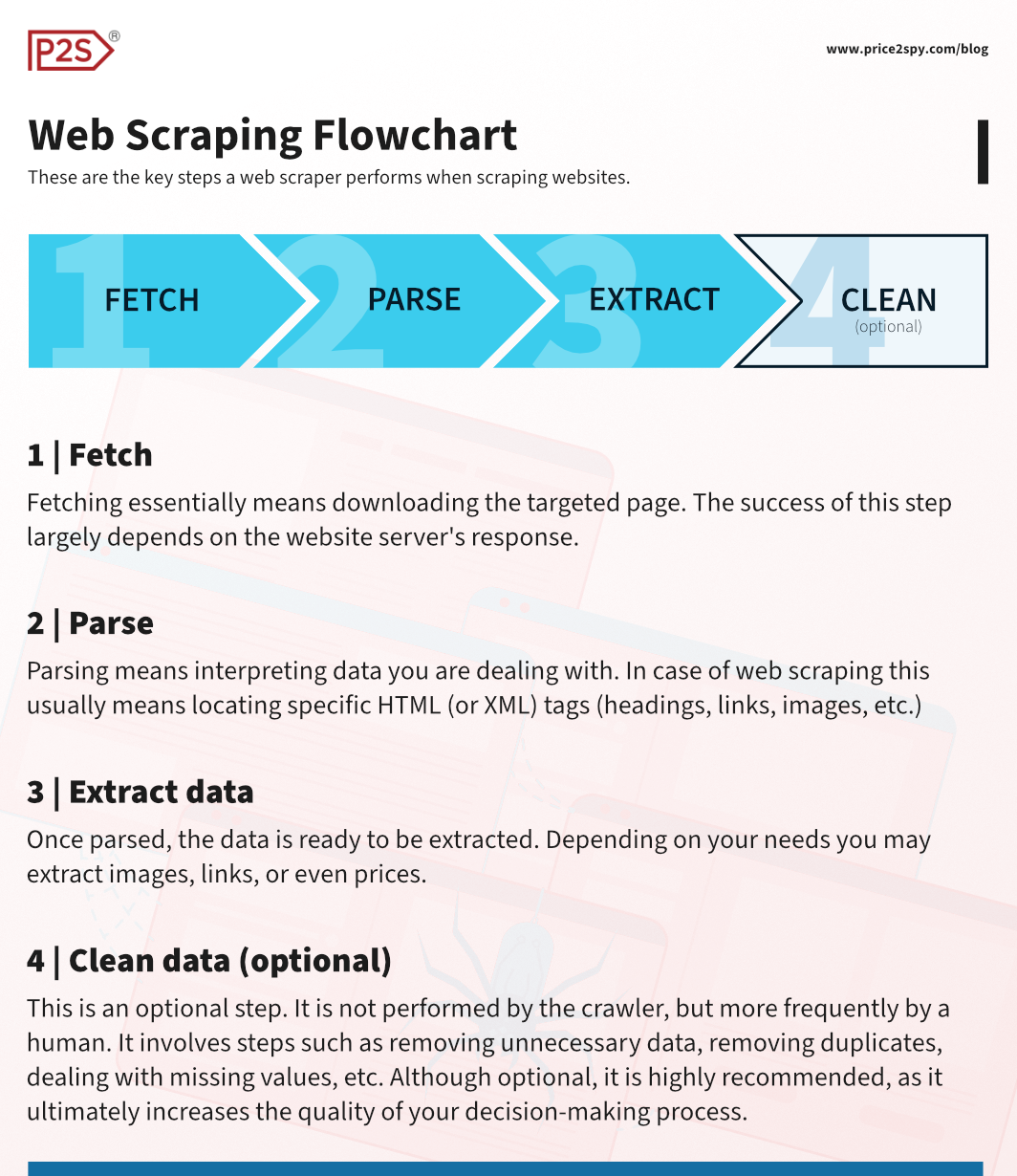

More technically, web scraping is the process of automated data extraction from websites using bots (known as crawlers or scrapers). Most commonly, it entails the following steps:

- Fetching the content – The scraper sends a request to the target website’s server. Then, depending on the HTTP response, the page, together with its content, is fetched in the HTML (or XML) format.

- Parsing the content – The second step—parsing—essentially means interpreting the HTML content and making sense of it. Usually it means locating specific elements (HTML tags) such as headings, paragraphs, and images.

- Data extraction – Once the specific elements have been found, the data itself is ready for extraction. Depending on your needs, you may extract text (including prices), links, images, or anything else on the page. Some tools are even able to capture prices shown as images.

- Cleaning, organizing, and structuring the data – Data gathered in step 3 is usually referred to as ‘raw data’. This means it requires some “polishing” and cleaning before it’s useful. This can mean deleting unnecessary spaces, dealing with missing values, reformatting, removing unnecessary data, and validating the results.

This is the backbone of the web and price scraping process. This process can be complicated by numerous factors starting from simple things such as pagination to advanced anti-bot measures on a website. We’ll get to price scraping challenges later on in the article.

Price Scraping Methods

Using a specialized price scraping tool

As opposed to general web scrapers, specialized price scraping tools are designed for eCommerce professionals in mind.

These tools overcome price scraping challenges more easily than the general web scrapers. Besides, due to the experience with similar clients, the time to set up these tools is the lowest. Additionally, there’s usually a set of analytical tools integrated with the price scraping capabilities.

However, if you are not interested in accessing the tool’s interface, be on the lookout for if it offers API integration.

The downside of these tools is that if you ever wish to go beyond price scraping, some may or may not be flexible enough.

Using a general web scraper

General web scrapers are created with the purpose of extracting any data from online sources. This goes beyond prices and includes images, product descriptions, stock availability, and more.

This is an easier approach than building your own tool. Also, if you require more than just pricing data, general web scraping tools will likely be able to help you.

Since these tools offer a broader set of scraping options, they frequently are the most expensive approach. Some are also limited in terms of how customizable they are when it comes to focusing on pricing data.

Writing your own price scraper

This approach requires some technical expertise or background. The main benefit is that you keep a maximal level of control over what and how is scraped.

Most commonly, it is built with Python using libraries such as Beautiful Soup, Scrapy, or Selenium.

There are numerous technical guides and courses out there if you are interested in giving it a try.

However, it does come with a few downsides.

First of all, there are the maintenance costs. You will have to find a way to deal with all the changes on the targeted websites. Also, whenever you wish to add another website to the list of monitored ones, there’s a high chance you will have to update your code.

Besides that, you will also need to find a way around the bot protection solutions each website may have (eg. CAPTCHA).

Additionally, it is on you to watch out for any ethical or legal obstacles when performing price scraping with your own tool.

Manual “price scraping”

Tracking competitor prices manually is not exactly scraping, per se. However, it is worth mentioning it here, as there are many eCommerce businesses who are gathering competitive intelligence this way.

This approach does make sense for smaller businesses. With a small product assortment and not many competitors, it doesn’t necessarily take a significant amount of time. It’s relatively simple to perform, too.

However, when you start to deal with more and more competitors (or resellers if you are a brand), automation is the way to go.

Price scraping challenges

Anti-bot (anti-scraping) measures

Web scrapers (or crawlers) are types of bots. Usually, they are the unwanted type of traffic to a website. This is why websites implement different solutions for preventing bots from accessing them.

You have likely heard of CAPTCHAs, but there’s also IP blocking, human behavior modelling, dynamic content loading, and more.

Dealing with these obstacles requires sufficient expertise, infrastructure, and resources. Specialized price scraping tools usually have teams dedicated to these issues.

Url changes

Whenever a URL changes, the price scraper encounters an issue. This can include changing the domain, page path, introducing or changing the rules of dynamic URLs, and other general URL-structure changes.

There’s no easy way to circumvent this. This fact means that it is required to do a regular upkeep and auditing of the scraping scripts to account for URL changes.

A tip, if you are creating a scraper of your own, is to implement a scraper with XPath/CSS selector logic to dynamically locate elements, as opposed to relying on URLs exclusively.

Page structure changes

Changes in HTML tags, page layout, or scripts can cause issues with price scraping. When such a change occurs, the price scraper won’t be able to find the required piece of data at the usual location (the one it was programmed to find it at)

There’s no simple solution here. An update to the script is required whenever such a problematic change occurs. This update is done either automatically or manually.

Specialized price scraping tools are more adaptable to these types of changes. General web scrapers on the other hand may require technical expertise to a certain extent. Also, these tools may be increasingly likely to fail if the page structure changes become more frequent.

Missing or incorrect Universal Product Codes (UPCs)

If products on the scraped website have missing or incorrect UPCs the price scraping process becomes slightly more difficult.

This is especially true for the product matching process – the step that usually precedes price scraping. The certainty that the scraped prices correspond to the correct products becomes lower (especially true if products have variations such as size, quantity, or color).

These issues subsequently impact the overall data accuracy and the difficulty of automating the price scraping process.

Here one solution would be to rely on someone to manually update and verify these parameters. Another approach would be to rely on a specialized price scraping tool with automated product matching capabilities.

Conclusion

We have shown that within the right context, price scrapers are a powerful tool for gathering competitive intelligence quickly.

In the current eCommerce environment, almost every large online retailer is monitoring competitor prices. Similar thing applies for brands, as well. Brands use price scraping to monitor MAP violations and uphold their brand value.

Depending on your needs, you may choose to go for a dedicated & specialized price scraping tool, a general web scraping tool, or give it a shot and try to build a scraper yourself.

The decision is on you and largely depends on your needs and resources. One common thread in this decision-making process is that the larger the company, the more sense it makes to go for a specialized eCommerce price scraper.